1. Purpose

Overall project topics:

- Multi-modality and integration of eye tracking for interactive IR.

- Reinforcement learning for user interest/attention modeling and assistance in information seeking tasks.

- Information presentation and visualization for intelligent information retrieval.

Specific goals in this study, for interactive IR:

- Learn to track eye movement.

- Learn to identify eye attention.

- Learn to identify and follow (changing) interests.

- Learn to predict and provide relevant assistance to foster interactive retrieval.

2. Eye Movement and Attention

Signals from eye tracking device:

- Gaze: position $(x,y)$ and duration $t$

- Dilation

Signals from other input devices:

- Mouse over $(x,y)$

- Mouse click $(x,y)$

- Positions of UI components and visualization
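The signals listed above can be held in small record types. The following is a minimal sketch only: the class names, field names, and units (`GazeSample`, `MouseEvent`, `UIComponent`, seconds for duration) are assumptions for illustration and do not come from any particular eye-tracking device API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GazeSample:
    x: float         # horizontal gaze position, in pixels
    y: float         # vertical gaze position, in pixels
    duration: float  # gaze duration t (seconds is an assumed unit)
    dilation: float  # pupil dilation reading (unit depends on the device)


@dataclass(frozen=True)
class MouseEvent:
    x: float
    y: float
    kind: str        # "over" or "click"


@dataclass(frozen=True)
class UIComponent:
    name: str
    left: float
    top: float
    width: float
    height: float

    def contains(self, x: float, y: float) -> bool:
        """Return True if the point (x, y) falls inside this component."""
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


# Hypothetical example: is the gaze resting on a button?
button = UIComponent("search_button", left=100, top=50, width=80, height=30)
gaze = GazeSample(x=120, y=60, duration=0.35, dilation=3.2)
gaze_on_button = button.contains(gaze.x, gaze.y)
```

Keeping UI component positions next to gaze and mouse samples makes it possible to relate a gaze point to the component under it.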

3. Reinforcement Learning

Given the reinforcement learning diagram below:

from graphviz import Digraph

dot = Digraph(comment="Reinforcement Learning", graph_attr={'rankdir':'LR'}, engine='dot')

dot.node('A', 'Agent', shape='circle')

dot.node('E', 'Environment', shape='circle')

dot.edge('A', 'E', label='Action')

dot.edge('E', 'A', label='State')

dot.edge('E', 'A', label='Reward')

dot

Now consider the first task to track and follow eye movement:

- Agent: the eye-tracking model that attempts to follow eye movement and identify interests.

- Environment: the entire dynamically changing setting in which the eye moves and the UI (visualization) changes.

- State: the state of the environment, such as the relative positions of the agent (tracker), eye, and mouse, and their current moving directions and speeds.

- Reward or Penalty: the result of the agent's action. For example, while the agent consumes energy in each move (penalty), it is rewarded for following the eye closely. In addition, it may receive a large reward for predicting a validated click after sufficient eye attention.

Through actions and interactions (state and reward) with the environment, an agent can gain knowledge and improve its actions. The way it acts on that knowledge is referred to as its policy:

Policy: the method (strategy) an agent uses to act on its knowledge to maximize its long-term rewards.

Q-learning is a reinforcement learning method in which the agent keeps track of the rewards associated with each state and action in a table, called the Q-table:

| | $A_1$ | $A_2$ | $A_3$ | .. | $A_m$ |
|---|---|---|---|---|---|
| $S_1$ | 0 | 0 | 0 | .. | 0 |
| $S_2$ | 0 | 0 | 0 | .. | 0 |
| .. | 0 | 0 | 0 | .. | 0 |
| $S_n$ | 0 | 0 | 0 | .. | 0 |

where:

- $S_1, S_2, .., S_n$ are all possible states (rows).

- $A_1, A_2, .., A_m$ are all possible actions (columns) for each state.

The Q-table is initialized with $0$ values and is updated whenever the agent performs an action and receives a reward (or penalty) from the environment.
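As a minimal sketch of the initialization described above, the Q-table can be built as a list of rows (one per state), each holding one value per action. The helper name `make_q_table` and the small sizes are assumptions for illustration.

```python
from typing import List


def make_q_table(n_states: int, n_actions: int) -> List[List[float]]:
    """Build an n_states x n_actions table filled with zeros."""
    # A fresh list is created for every row so that rows do not share storage.
    return [[0.0] * n_actions for _ in range(n_states)]


# Hypothetical sizes: 4 states (rows) and 3 actions (columns).
q_table = make_q_table(n_states=4, n_actions=3)
```

Indexing then follows the table layout: `q_table[s][a]` is the entry for state index `s` and action index `a`.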

Suppose that at time $t$ the agent is in state $s_t$, performs an action $a_t$, receives a reward $r_t$, and moves to the next state $s_{t+1}$.

Here is one strategy to update the Q-table:

\begin{eqnarray}
Q'(s_t, a_t) & \leftarrow & (1-\beta) Q(s_t, a_t) + \beta (r_t + \gamma \max_a{Q(s_{t+1},a)})
\end{eqnarray}

where:

- $\beta \in [0,1]$ is the learning rate. A greater $\beta$ leads to more aggressive updating.

- $\gamma \in [0,1]$ is the discount factor for future rewards. A greater $\gamma$ tends to put more emphasis on long-term rewards.
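The update rule above translates almost directly into code. This is a sketch under the assumption that states and actions are integer indices into a list-of-lists Q-table; the function name `q_update` and the default values of `beta` and `gamma` are illustrative choices, not values from this study.

```python
def q_update(q_table, s, a, r, s_next, beta=0.1, gamma=0.9):
    """Apply one Q-table update for the transition (s, a, r, s_next)."""
    # Best value currently estimated for the next state: max_a Q(s_{t+1}, a).
    best_next = max(q_table[s_next])
    # Blend the old estimate with the new target, weighted by the learning rate.
    q_table[s][a] = (1 - beta) * q_table[s][a] + beta * (r + gamma * best_next)
    return q_table[s][a]


# Worked example with two states and two actions.
q = [[0.0, 0.0],
     [0.0, 5.0]]
new_value = q_update(q, s=0, a=1, r=20, s_next=1, beta=0.5, gamma=0.9)
# (1 - 0.5) * 0 + 0.5 * (20 + 0.9 * 5) = 12.25
```

With $\beta = 1$ the old estimate is discarded entirely, and with $\beta = 0$ the table never changes.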

An agent learns by trial and error, so learning requires (random) exploration of the environment. At some point, the agent may gain "sufficient" knowledge to make wiser decisions based on a trained Q-table. However, to leave room for continued learning, action selection should not rely entirely on exploiting the Q-table.

To balance exploration against exploitation, we introduce a third parameter $\epsilon$ (a probability). At time $t$:

- With $\epsilon$ probability, the agent selects a random action.

- With $1 - \epsilon$ probability, the agent selects the action with the maximum Q-table value for state $s_t$: $a_t = \underset{a}{\operatorname{argmax}}{Q(s_t,a)}$
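The two cases above can be sketched as a single selection function. It assumes `q_row` is the Q-table row for the current state (one value per action); the name `select_action` and the optional `rng` parameter are illustrative.

```python
import random


def select_action(q_row, epsilon, rng=random):
    """Pick an action index for one state using epsilon-greedy selection."""
    if rng.random() < epsilon:
        # Explore: choose uniformly among all actions.
        return rng.randrange(len(q_row))
    # Exploit: choose the action with the largest table entry
    # (ties resolve to the lowest index).
    return max(range(len(q_row)), key=lambda a: q_row[a])


greedy_choice = select_action([0.0, 3.0, 1.0], epsilon=0.0)
random_choice = select_action([0.0, 3.0, 1.0], epsilon=1.0)
```

A common variation, not described in this document, is to start with a large $\epsilon$ and reduce it as training progresses.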

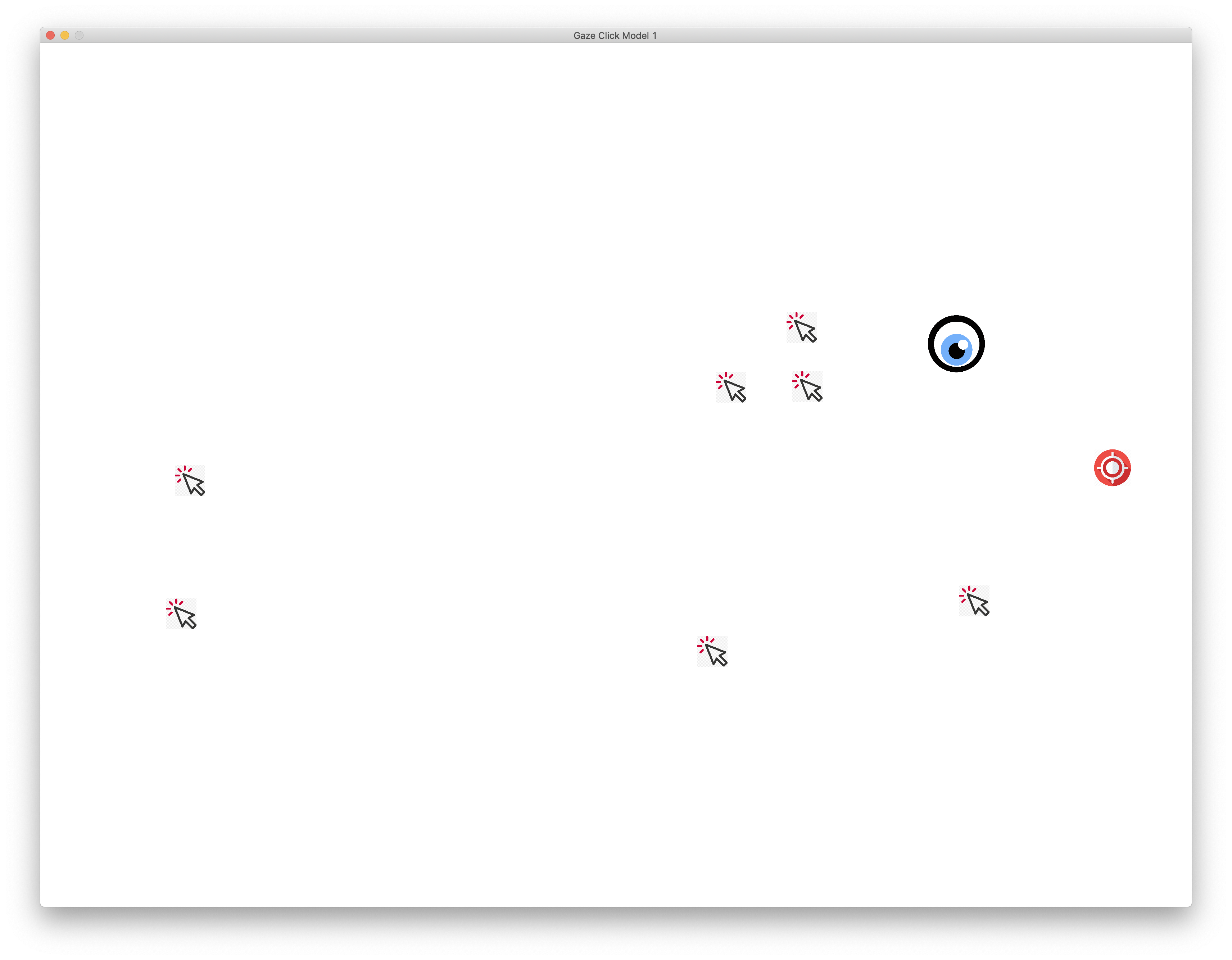

Major tasks of the tracker agent include:

- Adjust its speed to move closer to the eye.

- Stay in proximity to the eye and move as little (and as smoothly) as possible.

- Predict a click and get rewarded if validated.

States

The entire screen is divided into a grid of:

- $X$ blocks horizontally, e.g. $X=12$ of $100$-pixel blocks

- $Y$ blocks vertically, e.g. $Y=8$ of $100$-pixel blocks
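Mapping a pixel position to its grid block is an integer division by the block size. The sketch below uses the example values from the list above ($X=12$, $Y=8$, $100$-pixel blocks); clamping out-of-range positions to the nearest edge block is an assumption, as is the function name `to_block`.

```python
BLOCK_SIZE = 100        # pixels per block (example value)
GRID_X, GRID_Y = 12, 8  # number of blocks horizontally and vertically


def to_block(px, py):
    """Convert a pixel position to (column, row) block indices."""
    bx = int(px // BLOCK_SIZE)
    by = int(py // BLOCK_SIZE)
    # Assumption: positions outside the screen are clamped to the edge blocks.
    bx = min(max(bx, 0), GRID_X - 1)
    by = min(max(by, 0), GRID_Y - 1)
    return bx, by


eye_block = to_block(250, 799)
```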

States are combinations of the following:

- Eye position in the grid $(e_x,e_y)$.

- Latest eye move $(\Delta_x, \Delta_y)$, or latest moving average.

- Tracker position in the grid $(x,y)$.

- Tracker current speed and direction, $(v_x, v_y)$, where $v_x, v_y \in \{-2, -1, 0, 1, 2\}$.

- Tracker average speed in the latest iterations $(\bar{v_x}, \bar{v_y})$
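One way to represent such a combined state is an immutable tuple, which can serve directly as a dictionary key for Q-table lookups. The sketch below also counts the resulting states under two assumptions not stated in this document: the latest eye move is reduced to a direction in $\{-1, 0, 1\}$ per axis, and the average speed is rounded to the same five values as the current speed.

```python
from typing import NamedTuple, Tuple


class State(NamedTuple):
    eye: Tuple[int, int]           # eye block (e_x, e_y)
    eye_move: Tuple[int, int]      # latest eye move, as a direction per axis
    tracker: Tuple[int, int]       # tracker block (x, y)
    velocity: Tuple[int, int]      # current tracker speed (v_x, v_y)
    avg_velocity: Tuple[int, int]  # rounded average speed


GRID_X, GRID_Y = 12, 8        # example grid from the text
SPEEDS = (-2, -1, 0, 1, 2)    # allowed speed values per axis
MOVES = (-1, 0, 1)            # assumed discretization of the eye move


def state_space_size():
    """Count all state combinations under the assumptions above."""
    positions = GRID_X * GRID_Y    # 96 blocks
    moves = len(MOVES) ** 2        # 9 eye-move directions
    velocities = len(SPEEDS) ** 2  # 25 speed combinations
    # eye position x eye move x tracker position x speed x average speed
    return positions * moves * positions * velocities * velocities


s = State(eye=(3, 4), eye_move=(1, 0), tracker=(2, 4),
          velocity=(1, 0), avg_velocity=(1, 0))
q_values = {s: 0.0}  # a State is hashable, so it can index a dict-based Q-table
```

Under these assumptions the count reaches tens of millions of states, which suggests storing only visited states (for example in a dictionary) rather than a dense table.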

Actions

In each state, the tracker can take any of the following actions:

- Accelerate or decelerate on the $X$ dimension, $a_x \in \{-1, 0, +1\}$.

- Accelerate or decelerate on the $Y$ dimension, $a_y \in \{-1, 0, +1\}$.

- Click and select (assist), e.g. after tracker speed remains slow in the latest iterations.
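The action set can be enumerated explicitly. This sketch assumes that the two accelerations are chosen jointly (giving $3 \times 3 = 9$ move actions) and that clicking is a separate tenth action; it also assumes the resulting speed is clamped to the range $[-2, 2]$ given for the tracker speed. The names `ACTIONS` and `apply_acceleration` are illustrative.

```python
from itertools import product

ACCELERATIONS = (-1, 0, 1)
MAX_SPEED = 2

# Nine joint acceleration choices plus one click action (an assumed encoding).
MOVE_ACTIONS = [("move", ax, ay) for ax, ay in product(ACCELERATIONS, repeat=2)]
ACTIONS = MOVE_ACTIONS + [("click", 0, 0)]


def apply_acceleration(velocity, action):
    """Return the tracker speed after applying an action's acceleration."""
    _, ax, ay = action
    vx, vy = velocity

    def clamp(v):
        # Keep each speed component within [-MAX_SPEED, MAX_SPEED].
        return max(-MAX_SPEED, min(MAX_SPEED, v))

    return clamp(vx + ax), clamp(vy + ay)


new_velocity = apply_acceleration((2, 0), ("move", 1, -1))
```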

Rewards (or Penalty)

There are related rewards and penalties for each action:

- $-1$ point penalty for each action (e.g. change of speed).

- $20$-point reward for staying in the same block as the eye.

- $40$-point reward for predicting a correct mouse click, e.g. triggered by low speed in the latest iterations.
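The point values above can be combined into a single reward function. This is a sketch that assumes the three components simply add up within one step; the function name `reward` and its parameters are hypothetical.

```python
def reward(eye_block, tracker_block, action_kind, click_validated=False):
    """Return the total reward for one step, using the point values above."""
    total = -1  # every action costs one point
    if eye_block == tracker_block:
        total += 20  # tracker is in the same block as the eye
    if action_kind == "click" and click_validated:
        total += 40  # predicted click was confirmed by a real mouse click
    return total


following = reward((3, 4), (3, 4), "move")                         # -1 + 20
correct_click = reward((3, 4), (3, 4), "click", click_validated=True)  # -1 + 20 + 40
far_away = reward((0, 0), (1, 1), "move")                          # -1
```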